Now it was time to construct an algorithm that could tease some conclusions from this mass of data. What might a cheating teacher’s classroom look like? (Freakonomics: A Rogue Economist

The first thing to search for would be unusual answer patterns in a given classroom: blocks of identical answers, for instance, especially among the harder questions. If ten very bright students (as indicated by past and future test scores) gave correct answers to the exam’s first five questions (typically the easiest ones), such an identical block shouldn’t be considered suspicious. But if ten poor students gave correct answers to the last five questions on the exam (the hardest ones), that’s worth looking into. Another red flag would be a strange pattern within any one student’s exam—such as getting the hard questions right while missing the easy ones—especially when measured against the thousands of students in other classrooms who scored similarly on the same test. Furthermore, the algorithm would seek out a classroom full of students who performed far better than their past scores would have predicted and who then went on to score significantly lower the following year. A dramatic one-year spike in test scores might initially be attributed to a good teacher; but with a dramatic fall to follow, there’s a strong likelihood that the spike was brought about by artificial means. [Emphasis added. More tomorrow on the numerous pointed points that seem to be artifacts of benevolent design.]
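(An aside that is not from the book: the spike-then-fall check described above can be sketched in a few lines of Python. The function name, the use of class means, and the 10-point threshold are all assumptions made for illustration; the excerpt does not say how the authors actually measured a "dramatic" change.)

```python
from statistics import mean


def flag_spike_then_fall(prev, current, following, threshold=10.0):
    """Flag a class whose mean score jumps one year and drops back the next.

    prev, current, following: lists of scores for the same class in three
    consecutive years. threshold is a hypothetical cutoff in score points,
    not a value taken from the book.
    """
    gain = mean(current) - mean(prev)       # rise into the suspect year
    drop = mean(current) - mean(following)  # fall in the year after
    return gain >= threshold and drop >= threshold


# Invented scores: a big one-year jump followed by a fall back.
suspicious = flag_spike_then_fall([50, 55, 60], [75, 80, 85], [52, 58, 61])
# Invented scores: a gain that holds up the following year.
steady = flag_spike_then_fall([50, 55, 60], [60, 65, 70], [62, 66, 72])
```

A gain that persists the next year is not flagged, which matches the reasoning in the passage: a good teacher's improvement should not vanish twelve months later.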

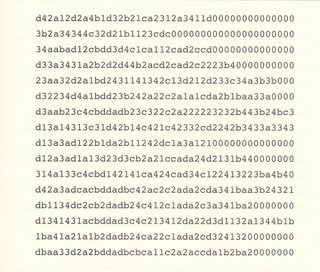

Consider now the answer strings from the students in two sixth grade Chicago classrooms who took the identical math test. Each horizontal row represents one student’s answers. The letter a, b, c, or d indicates a correct answer; a number indicates a wrong answer, with 1 corresponding to a, 2 corresponding to b, and so on. A zero represents an answer that was left blank. One of these classrooms almost certainly had a cheating teacher and the other did not. Try to tell the difference, although be forewarned that it's not easy with the naked eye.
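(Another aside, not from the book: the encoding just described is easy to decode mechanically. This is a minimal sketch; the function names are made up, and it assumes exactly four answer options, as the passage implies.)

```python
CORRECT = set("abcd")   # a letter means the student chose that option correctly
WRONG = set("1234")     # a digit means a wrong choice: 1 -> a, 2 -> b, ...


def decode_answer(ch):
    """Return (chosen_option, is_correct) for one character of a row.

    A blank answer ("0") is returned as (None, False).
    """
    if ch == "0":
        return (None, False)
    if ch in CORRECT:
        return (ch, True)
    if ch in WRONG:
        return ("abcd"[int(ch) - 1], False)
    raise ValueError(f"unexpected answer code: {ch!r}")


def score(answers):
    """Count the correct answers in one student's row."""
    return sum(1 for ch in answers if ch in CORRECT)


# Invented row: correct a, correct b, wrong (chose c), blank, correct d, wrong (chose a).
row = "ab30d1"
decoded = [decode_answer(ch) for ch in row]
total_correct = score(row)
```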

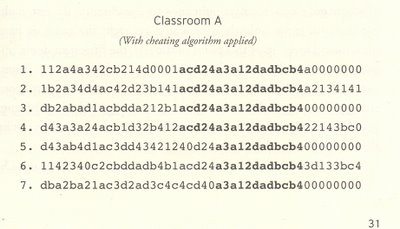

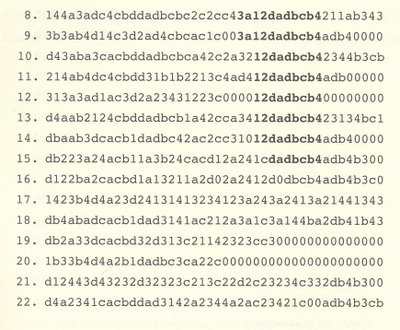

If you guessed that classroom A was the cheating classroom, congratulations. Here again are the answer strings from classroom A, now reordered by a computer that has been asked to apply the cheating algorithm and seek out suspicious patterns.

Take a look at the answers in bold. Did fifteen out of twenty-two students somehow manage to reel off the same six consecutive correct answers (the d-a-d-b-c-b string) all by themselves?

There are at least four reasons this is unlikely. One: those questions, coming near the end of the test, were harder than the earlier questions. Two: these were mainly subpar students to begin with, few of whom got six consecutive right answers elsewhere on the test, making it all the more unlikely they would get right the same six hard questions. Three: up to this point in the test, the fifteen students’ answers were virtually uncorrelated. Four: three of the students (numbers 1, 9, and 12) left at least one answer blank before the suspicious string and then ended the test with another string of blanks. This suggests that a long, unbroken string of blank answers was broken not by the student but by the teacher.
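(An aside, not from the book: the kind of shared block described above can be hunted for with a simple window scan. This is only a rough sketch of the idea, not the authors' algorithm, which the excerpt does not spell out. The function name is hypothetical and the answer strings are invented toy data, not the Chicago classroom.)

```python
from collections import Counter


def most_shared_block(answer_strings, length=6):
    """Find the same-position block of answers shared by the most students.

    Returns (start_index, block, student_count). Blocks containing a blank
    ("0") are skipped, so runs of unanswered questions do not count.
    """
    best = (0, "", 0)
    if not answer_strings:
        return best
    n_questions = min(len(s) for s in answer_strings)
    for start in range(n_questions - length + 1):
        counts = Counter(
            s[start:start + length]
            for s in answer_strings
            if "0" not in s[start:start + length]
        )
        if not counts:
            continue
        block, count = counts.most_common(1)[0]
        if count > best[2]:  # keep the earliest block on ties
            best = (start, block, count)
    return best


# Invented toy classroom of four students, ten questions each.
toy_class = [
    "1b2dadbcb4",
    "a32dadbcb4",
    "ab3dadbcb4",
    "a1c4a2b1c0",
]
print(most_shared_block(toy_class))  # (3, 'dadbcb', 3)
```

A real detector would also have to weigh how hard the questions are and how weak the students are, as the four reasons above make clear; a raw count of matching strings is only the first step.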

There is another oddity about the suspicious answer string. On nine of the fifteen tests, the six correct answers are preceded by another identical string, 3-a-1-2, which includes three of four incorrect answers. And on all fifteen tests, the six correct answers are followed by the same incorrect answer, a 4. Why on earth would a cheating teacher go to the trouble of erasing a student’s test sheet and then fill in the wrong answer?

Perhaps she is merely being strategic. In case she is caught and hauled into the principal’s office, she could point to the wrong answers as proof that she didn’t cheat. Or perhaps—and this is a less charitable but just as likely answer—she doesn’t know the right answers herself. (With standardized tests, the teacher is typically not given an answer key.) If this is the case, then we have a pretty good clue as to why her students are in need of inflated grades in the first place: they have a bad teacher.

Explores the Hidden Side of Everything, by Steven D. Levitt and Stephen J. Dubner: 29-33)

Public school teachers are not allowed to teach about ID in public schools, fortunately, for a number of reasons. So come close, public high school students, and I'll whisper some information about ID: it is a tool you can use to catch some teachers. As a science, it comports with the idea that information and intelligence are irreducible and perhaps metaphysical, just as the philosophers thought. That has implications for education. After all, how much State funding did Socrates and other philosophers who adhered to intelligent design need? At the end, Socrates noted sardonically that he deserved such funding, yet he never received it.

Note the State schools:

An analysis of the entire Chicago data reveals evidence of teacher cheating in more than two hundred classrooms per year, roughly 5 percent of the total. This is a conservative estimate, since the algorithm was able to identify only the most egregious form of cheating—in which teachers systematically changed students’ answers—and not the many subtler ways a teacher might cheat. In a recent study among North Carolina schoolteachers, some 35 percent of the respondents said they had witnessed their colleagues cheating in some fashion, whether by giving students extra time, suggesting answers, or manually changing students’ answers. (Ibid.: 35)

What are the characteristics of a cheating teacher? The Chicago data show that male and female teachers are about equally prone to cheating. A cheating teacher tends to be younger and less qualified than average. She is also more likely to cheat after her incentives change. Because the Chicago data ran from 1993 to 2000, it bracketed the introduction of high-stakes testing in 1996. Sure enough, there was a pronounced spike in cheating in 1996. Nor was the cheating random. It was the teachers in the lowest-scoring classrooms who were most likely to cheat. It should also be noted that the $25,000 bonus for California teachers was eventually revoked, in part because of suspicions that too much of the money was going to cheaters.
